Agents recheck context because skills alone don’t decide when to act. Stable output needs a reusable decision standard built from goals, constraints, and state.

Quick Checklist

Is the agent’s goal explicit?

Are constraints written as rules (not vibes)?

Is there a current state snapshot?

Is there a decision loop (check → act → evaluate → update)?

Definition: What does it mean to “recheck context”?

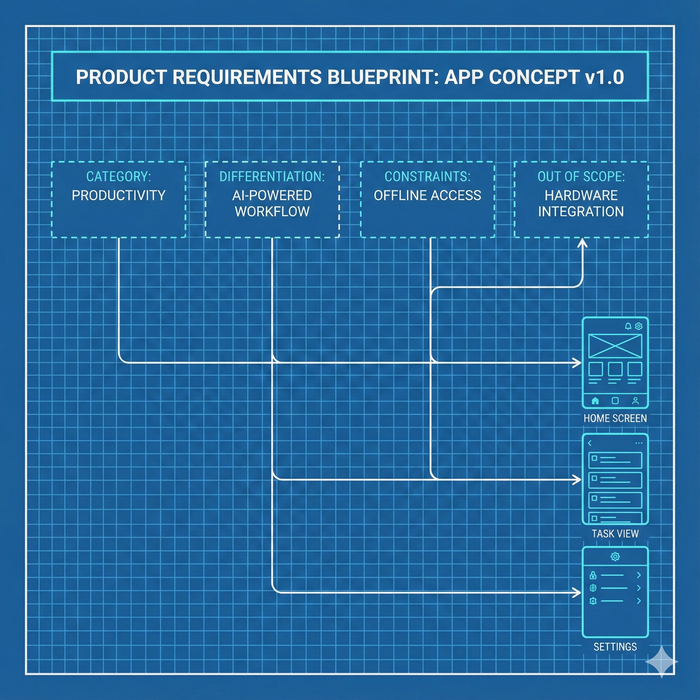

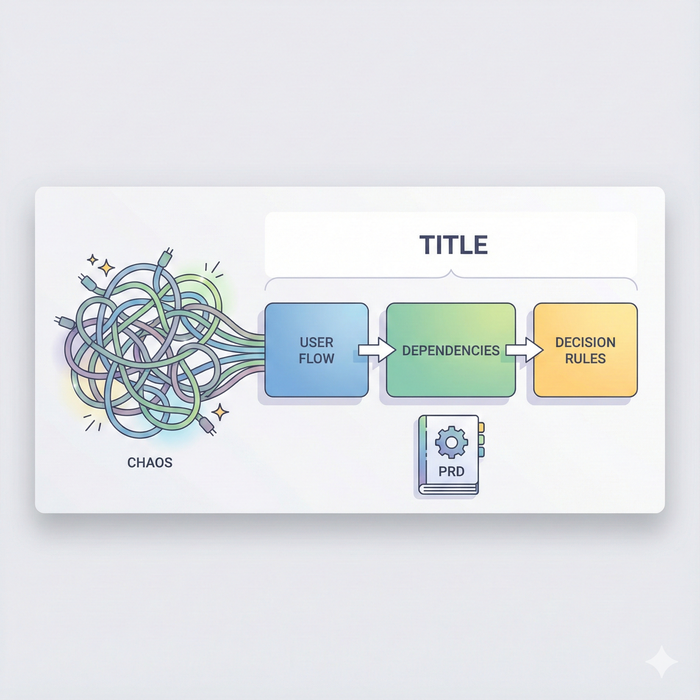

In modern agent designs, “context” isn’t one prompt. It’s the full decision frame: goal, constraints, previous decisions, and current state. Agents repeatedly return to it to ask: “Given what’s true now, is this still the right next move?” This pattern shows up in agent paradigms that interleave reasoning with actions and add reflection loops, such as ReAct and Reflexion.
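To make the idea of a decision frame concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a reference implementation: the `DecisionFrame` class, its field names, and the two example rules (`budget`, `no_repeat`) are all hypothetical. It shows the four parts of the frame held together in one object, plus a `recheck` method that asks whether a proposed action is still allowed given the current state.

```python
from dataclasses import dataclass, field
from typing import Callable

# A constraint is a named rule (hypothetical shape): it receives the current
# state and a proposed action, and returns True when the action is allowed.
Constraint = Callable[[dict, str], bool]


@dataclass
class DecisionFrame:
    goal: str                                   # what the agent is trying to achieve
    constraints: dict[str, Constraint]          # rules, written explicitly
    state: dict = field(default_factory=dict)   # snapshot of what is true now
    history: list[str] = field(default_factory=list)  # previous decisions

    def violations(self, action: str) -> list[str]:
        """Return the names of the constraints the proposed action would break."""
        return [name for name, rule in self.constraints.items()
                if not rule(self.state, action)]

    def recheck(self, action: str) -> bool:
        """Given what is true now, is this still an allowed next move?"""
        return not self.violations(action)

    def record(self, action: str) -> None:
        """Append a decision to the history once it has been taken."""
        self.history.append(action)


# Example frame; the goal text and both rules are made up for illustration.
frame = DecisionFrame(
    goal="answer the user's question with cited sources",
    constraints={
        "budget": lambda state, action: state["calls_used"] < state["call_budget"],
        "no_repeat": lambda state, action: action != state.get("last_action"),
    },
    state={"calls_used": 0, "call_budget": 2, "last_action": None},
)

print(frame.recheck("search"))      # allowed while budget remains
frame.state["calls_used"] = 2       # the state changes...
print(frame.violations("search"))   # ...so the same action is now rejected
```

The point of the sketch is that the same proposed action gets a different answer once the state changes, which is why the frame has to be consulted again before each step rather than once at the start.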

Decision Criteria: Why a single instruction isn’t enough

As agents gain skills (search, summarize, code, browse), instability increases if there’s no standard for:

- which skill to use
- when to stop
- how to resolve conflicts

That’s why “structure” matters: ReAct interleaves reasoning traces with actions so each step is grounded in a fresh observation, which limits error propagation, and Reflexion stores verbal self-reflections in memory so later trials can improve on earlier ones.
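The three questions above (which skill, when to stop, how to resolve conflicts) can be written as explicit rules inside a check → act → evaluate → update loop. The following Python sketch is a toy under stated assumptions: the skills `search` and `summarize` are stubs, and `PRIORITY`, `applicable`, `should_stop`, `evaluate`, and `run` are hypothetical names. It is loosely inspired by the loop structure described here and is not an implementation of ReAct or Reflexion.

```python
from typing import Callable

# Stub skills (hypothetical): each reads the state and returns an observation.
def search(state: dict) -> str:
    return "found 2 sources"

def summarize(state: dict) -> str:
    return "summary drafted"

SKILLS: dict[str, Callable[[dict], str]] = {"search": search, "summarize": summarize}

# Conflict rule (assumed): when several skills apply, the lowest number wins.
PRIORITY = {"search": 0, "summarize": 1}


def applicable(state: dict) -> list[str]:
    """Which skill to use: list every skill whose precondition holds."""
    names = []
    if not state["has_sources"]:
        names.append("search")
    if not state["has_summary"]:
        names.append("summarize")
    return names


def should_stop(state: dict, max_steps: int) -> bool:
    """When to stop: the goal is met or the step budget is spent."""
    return state["has_summary"] or state["step"] >= max_steps


def evaluate(state: dict, skill: str, observation: str) -> None:
    """Evaluate the observation and update the state snapshot in place."""
    if skill == "search" and observation.startswith("found"):
        state["has_sources"] = True
    elif skill == "summarize" and state["has_sources"]:
        state["has_summary"] = True
    state["notes"].append(f"step {state['step']}: {skill} -> {observation}")


def run(max_steps: int = 5) -> dict:
    state = {"has_sources": False, "has_summary": False, "step": 0, "notes": []}
    while not should_stop(state, max_steps):                 # check
        candidates = applicable(state)
        if not candidates:
            break
        skill = min(candidates, key=PRIORITY.__getitem__)    # resolve conflict
        observation = SKILLS[skill](state)                   # act
        evaluate(state, skill, observation)                  # evaluate + update
        state["step"] += 1
    return state


result = run()
for note in result["notes"]:
    print(note)
```

On the first pass both skills are applicable, so the priority table picks `search`; on the second pass only `summarize` remains, and the loop stops once the summary exists. The `notes` list is a plain log kept for inspection, not a model of Reflexion's memory.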